Welcome to the latest installment of the Brief Bio series, where I'm writing up very informal biographies about major figures in the history of computers. Please take a look at the Brief Bio archive, and feel free to leave corrections and omissions in the comments.

John von Neumann

Since Ada Lovelace's death, there was a pretty big lull in notable computer-related activity. World War II was the main catalyst for significant research and discovery, which is why I'm skipping ahead to figures involved in that period. If you think I've skipped over someone worth mentioning, please let me know in the comments.

But now, I'm skipping ahead about half a century to 1903, when John von Neumann was born in Budapest, in the Austro-Hungarian Empire, to wealthy parents. His father was a banker, and von Neumann's precociousness, especially in mathematics, can be at least partially attributed to his father's profession. Von Neumann tore through the educational system and received a Ph.D. in mathematics when he was 22 years old.

Like Pascal, Leibniz, and Babbage, von Neumann contributed to a wide variety of fields outside computing. Some of the high points of his work and contributions include:

He also worked on the Manhattan Project, and thus helped to end World War II in the Pacific. He was present for the very first atomic bomb test. After the war, he went on to work on hydrogen bombs and ICBMs. He applied game theory to nuclear war, and is credited with the strategy of Mutually Assured Destruction.

His work on the hydrogen bomb is where von Neumann enters the picture as a founding father of computing. He introduced the stored-program concept, in which a computer's instructions are held in the same memory as the data they operate on; this design would come to be known as the von Neumann architecture.
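The stored-program idea is easy to see in miniature. The sketch below is a toy machine, not EDVAC or any real computer: its instruction set (LOAD, ADD, SUB, STORE, JNZ, HALT) and memory layout are hypothetical, made up for illustration. What it shares with the von Neumann design is that the program and its data sit in one memory, and a fetch-decode-execute loop reads instructions out of that memory one at a time.

```python
def run(memory):
    """Fetch-decode-execute loop for a toy stored-program machine.

    Instructions are (opcode, address) tuples and data are plain ints, but both
    live in the same `memory` list. The instruction set is hypothetical.
    """
    acc = 0  # accumulator register
    pc = 0   # program counter: address of the next instruction
    while True:
        op, addr = memory[pc]  # fetch the instruction stored at address pc
        pc += 1
        if op == "LOAD":       # acc <- memory[addr]
            acc = memory[addr]
        elif op == "ADD":      # acc <- acc + memory[addr]
            acc += memory[addr]
        elif op == "SUB":      # acc <- acc - memory[addr]
            acc -= memory[addr]
        elif op == "STORE":    # memory[addr] <- acc
            memory[addr] = acc
        elif op == "JNZ":      # branch to addr if acc is nonzero
            if acc != 0:
                pc = addr
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {op!r} at address {pc - 1}")


# Multiply 3 * 4 by repeated addition. Addresses 0-7 hold the program;
# addresses 8-11 hold the data it works on.
MEMORY = [
    ("LOAD", 10),   # 0: acc = result
    ("ADD", 9),     # 1: acc += addend
    ("STORE", 10),  # 2: result = acc
    ("LOAD", 8),    # 3: acc = counter
    ("SUB", 11),    # 4: acc -= 1
    ("STORE", 8),   # 5: counter = acc
    ("JNZ", 0),     # 6: loop while counter != 0
    ("HALT", 0),    # 7: stop
    3,              # 8: counter
    4,              # 9: addend
    0,              # 10: result
    1,              # 11: the constant 1
]

final = run(list(MEMORY))  # run on a copy so MEMORY stays reusable
print(final[10])  # 12
```

Because the program occupies the same memory as its data, running a different program is just a matter of writing different values into memory, rather than rewiring the machine as was done with ENIAC.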

Von Neumann became a commissioner of the United States Atomic Energy Commission. Here's a video of von Neumann, while in that role, advocating for more training and education in computing.

In 1955, von Neumann was diagnosed with some form of cancer, possibly related to his exposure to radiation at the first nuclear test. He died in 1957 and is buried in Princeton Cemetery (New Jersey).

I encourage you to read more about him in John von Neumann: The Scientific Genius Who Pioneered the Modern Computer, Game Theory, Nuclear Deterrence, and Much More (which looks to be entirely accessible on Google Books).

Welcome to another "Weekly Concerns". This is a post-a-week series of interesting links, relevant to programming and programmers. You can check out previous Weekly Concerns posts in the archive.

- 5 Things You Should Stop Doing With jQuery. It's a sensational title, but read all the way to the end before you judge.

- PostSharp 3.2 Preview 2 is available, with improvements that help you write multi-threaded code (among other things).

- Direct casting vs "as" casting in C#

- Check out the new and improved WordPress Code Reference.

If you have an interesting link that you'd like to see in Weekly Concerns, leave a comment or contact me.

I've recently wrapped up work on a project, so I thought I'd share what I've learned, specifically with regard to Fluent Migrator (which I introduced in a previous post).

Mostly, it went pretty well, but there were a few bumps along the way, and a few things that still aren't as elegant as I'd like. As always, your mileage may vary.

1) Fluent Migrator and Entity Framework do okay together. Entity Framework Code First Migrations would probably make a little more sense, but this project wasn't exactly using EF in a textbook fashion anyway.

2) Fluent Migrator works great with Octopus Deploy, though maybe not for rolling back. I've found that rolling back is typically something I only do when I'm developing. By the time I check in a migration, it's pretty much not getting rolled back, and certainly not by Octopus. There are a handful of migrations where a rollback doesn't really work anyway (how do you roll back making a varchar field bigger, for instance, once longer values have been stored in it?). So, my thought on rollbacks is: do the best you can, don't worry if your rollbacks aren't perfect, and after the migration is committed and/or deployed, consider "rolling forward" instead of rolling back.
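The "roll forward" idea can be sketched outside of Fluent Migrator. The following is not Fluent Migrator's API; it's a minimal Python sketch using the standard-library sqlite3 module, with a hypothetical version_info table (loosely analogous to the VersionInfo table Fluent Migrator keeps) and hypothetical customer and customer_note tables. Each migration is applied once, in version order, and a deployed mistake is corrected by adding a new migration rather than by running a "down" step.

```python
import sqlite3


def migrate(conn, migrations):
    """Apply, in version order, each (version, sql) migration not yet recorded."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS version_info (version INTEGER PRIMARY KEY)"
    )
    applied = {row[0] for row in conn.execute("SELECT version FROM version_info")}
    for version, up_sql in sorted(migrations):
        if version in applied:
            continue  # already deployed; never re-run
        conn.execute(up_sql)
        conn.execute("INSERT INTO version_info (version) VALUES (?)", (version,))
        conn.commit()  # record each version as soon as it has been applied


# Hypothetical migration history; only "up" steps are kept.
migrations = [
    (1, "CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT)"),
    (2, "CREATE TABLE customer_note (customer_id INTEGER, note TEXT)"),
]

conn = sqlite3.connect(":memory:")
migrate(conn, migrations)  # deploys versions 1 and 2

# Version 2 turned out to be a mistake. Instead of rolling it back on
# deployed databases, roll forward with a new migration that undoes it.
migrations.append((3, "DROP TABLE customer_note"))
migrate(conn, migrations)  # applies only version 3
```

One simplification worth noting: each migration's SQL and its version_info row are not committed as a single atomic unit here, so a crash between the two could leave a migration applied but unrecorded. A real tool would need to handle that.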

3) Fluent Migrator is great for tables. It is not so great with views/sprocs/functions/etc. I didn't really have a plan for these when I started. Fluent Migrator can use embedded script files--that's the direction I went with it. But I'm not terribly happy with it: seems like a lot of repetition and/or ambiguity.

4) Similarly, I didn't have a plan in place for dealing with test data or sprocs/views that use linked servers. I explored using profiles for these, but again, I'm not terribly pleased with the result. I think, generally, it would be nicer to avoid the views/sprocs as much as possible.

5) My strategy of creating a bunch of bat files is okay, but it would be really nice if there were some sort of little UI tool for running migrations: something where I could select (or enter) a connection string, set a couple of flags with dropdowns/checkboxes/etc., and click a button to run the migration. I think this would be preferable to having to look up all the command line flags each time (which I did often enough to annoy me, but not often enough to commit them to memory) and/or save a whole bunch of batch file variations. Maybe a standalone WinForms app, or maybe a VS plugin.

I think one of the challenges with Fluent Migrator was demonstrating its value to the rest of the team at the beginning. It seemed like a lot of extra and/or unnecessary work and bookkeeping. However, once we got a build server and deployment server running, it really paid off. Deployments became much easier: there was no more asking around and trying to figure out which versions of the database were being put where. It was one less strain towards the end of each sprint.

Welcome to the latest installment of the Brief Bio series, where I'm writing up very informal biographies about major figures in the history of computers. Please take a look at the Brief Bio archive, and feel free to leave corrections and omissions in the comments.

Ada Lovelace

Augusta Ada Byron, born in 1815, was not allowed to see a picture of her father, the Romantic poet Lord (George Gordon) Byron, until she turned 20. Her father never had a relationship with her. Her mother was distant as well, and once even referred to Ada as "it" instead of "her". In addition to these hurdles, Ada also suffered from vision-limiting headaches and a bout of measles that left her paralyzed. However, if it weren't for these illnesses, she might not be such a significant figure in the history of computing. Because she was part of the aristocracy and had access to books and tutors, she was able to study mathematics and technology even while sick, and developed an interest in them.

She met Babbage in 1833 through a mutual friend. Given her interest in technology and mathematics, she was fascinated by Babbage's Difference Engine. She became friends with Babbage, visiting and corresponding with him often.

Ada married in 1835 and became a baroness. After the birth of her second child, Annabella, she again suffered from an illness for months. In 1838, her husband became the Earl of Lovelace, and she became the Countess of Lovelace, which is the source of her most commonly used name, "Ada Lovelace".

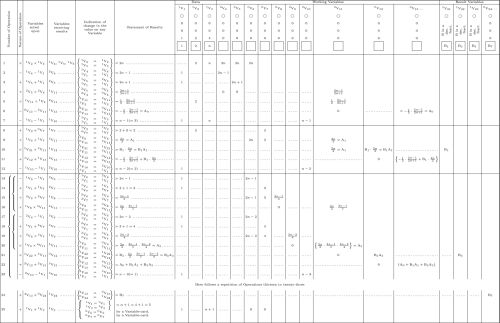

In 1842, she translated an article by Luigi Menabrea about Babbage's proposed Analytical Engine, and added a set of her own notes to the translation, published in 1843 as Sketch of the Analytical Engine Invented by Charles Babbage. The last of these notes, "Note G", describes a method for calculating Bernoulli numbers with the engine, and it is what many people point to as the first computer program. Not only is it the first program, it also demonstrates the core concept of the Analytical Engine: it's a more general-purpose machine than any of the adding machines that came before it. It shows that the machine would be capable of branching, addressing memory, and therefore processing an algorithm.

Paul Sinnett used Ada's algorithm to create a Scratch program that you can try out and use to visualize the algorithm (just click "See Inside").
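Note G's program computes Bernoulli numbers. For readers curious about the numbers themselves, here is a short Python sketch that computes them with exact fractions using the standard modern recurrence: for every m of at least 1, the sum over k from 0 to m of C(m+1, k) * B_k equals 0, with B_0 = 1. This is a swapped-in technique, not a transcription of Ada's table of operations, and it uses modern numbering (B_1 = -1/2), which differs from the indexing used in Note G.

```python
from fractions import Fraction
from math import comb


def bernoulli_numbers(n):
    """Return [B_0, B_1, ..., B_n] as exact fractions (modern convention, B_1 = -1/2).

    Uses the recurrence sum_{k=0}^{m} C(m+1, k) * B_k = 0 for m >= 1,
    solved for B_m. This is not the method laid out in Note G.
    """
    b = [Fraction(1)]  # B_0 = 1
    for m in range(1, n + 1):
        total = sum(comb(m + 1, k) * b[k] for k in range(m))
        b.append(-total / (m + 1))  # divide by C(m+1, m), which is m + 1
    return b


for i, value in enumerate(bernoulli_numbers(8)):
    print(f"B_{i} = {value}")
```

This prints B_0 = 1, B_1 = -1/2, B_2 = 1/6, B_4 = -1/30, B_6 = 1/42, and B_8 = -1/30, with zeros at the odd indices above 1. Using Fraction rather than floats keeps every value exact, so no rounding error builds up as later numbers are computed from earlier ones.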

There is something of a controversy about Ada and this program. It's been argued that Babbage prepared the bulk of these notes for her, and therefore her contributions are not as significant. It's also argued that Babbage had already written several "programs" (albeit much simpler ones) prior to the Bernoulli program in Note G, and therefore Babbage, not Ada, is the first programmer.

Here is a notable passage that Babbage wrote about Ada and the Bernoulli algorithm, from his autobiography, Passages from the Life of a Philosopher:

"We discussed together the various illustrations that might be introduced: I suggested several, but the selection was entirely her own. So also was the algebraic working out of the different problems, except, indeed, that relating to the numbers of Bernouilli, which I had offered to do to save Lady Lovelace the trouble. This she sent back to me for an amendment, having detected a grave mistake which I had made in the process."

So, at the very least, she filed the first-ever bug report. My judgement is that Ada was a significant colleague and collaborator of Babbage's. She was not just a translator or secretary; she contributed to his work in a significant and meaningful way. Babbage's work on the Analytical Engine probably wouldn't have gone nearly as far without her help. She is certainly as worthy of celebration as Charles Babbage (every October there's an Ada Lovelace Day).

Ada and her husband were really into gambling. By 1851, she had started a gambling syndicate, and it's possible that she and her husband were trying to use mathematical models to get an edge on horse racing bookies. That seems like a great idea to me, given how advanced her knowledge of mathematics probably was compared to that of most gamblers at the time; but apparently the execution didn't work, and they ran up huge gambling debts. She died of cancer the next year at the tragically young age of 36, leaving behind three children. Hers was a short life, plagued by illness and family struggles and ended by cancer. But "the light that burns twice as bright burns half as long."

You may have also heard of the Ada programming language, which is named after her.

Ada is a language that was developed for the U.S. Department of Defense. It has an emphasis on safety, containing a number of compile-time and run-time checks to avoid errors that other languages would otherwise allow, including buffer overflows, deadlocks, misspellings, etc. At one point, it was thought that Ada would become a dominant language because of some of its unique features, but that has obviously not happened. However, it's not a dead language and lives on in specialty applications. It's #32 on the TIOBE index as of this writing, beating out Fortran, Haskell, Scala, Erlang, and many others.

Welcome to another "Weekly Concerns". This is a post-a-week series of interesting links, relevant to programming and programmers. You can check out previous Weekly Concerns posts in the archive.

- You're doing everything wrong (NSFW language). But are you really? Or are you just doing it differently?

- Some free icons from GitHub.

- An infographic about new syntax in C# 6.0.

- Did I do this one already? Well, I'll list it again, because I like it so much: a dress with all the most common passwords on it.

If you have an interesting link that you'd like to see in Weekly Concerns, leave a comment or contact me.